The Case for Verifiable AI Provenance

AI has become the most crucial technology of our generation, upending every area of human interaction. Yet the foundation it relies on, data and IP created by individuals, remains outside creators’ control once AI systems use it. Models train on content scraped without consent or compensation. Artists watch their styles reproduced with no record of whether their work was licensed or stolen. Creators lose attribution as their IP gets remixed through layers of derivative works, with no transparent chain connecting output back to origin.

This isn’t a minor oversight in an otherwise functional system. It’s an existential flaw that undermines the entire promise of AI as a transformative technology. When the most powerful AI systems are built on foundations of IP infringement and data exploitation, we’re not setting standards for innovation; we’re normalizing extraction. One example is Reddit’s lawsuit against Anthropic, which alleges that Anthropic trained its models on Reddit data without permission.

Agent-based systems like ELIZA OS, which operate autonomously across multiple integrations, can generate outputs closely resembling artists’ work without attribution and without any provenance or fingerprinting mechanism to trace their origin. At scale, this gap doesn’t just create legal liability—it makes the entire AI economy unfair by design.

Provenance

Provenance forms the history tree of a piece of content: who created it and under which licenses it may be used. When a model trains on a dataset, provenance tracks which creators contributed which pieces and under what terms. When an agent creates new content, provenance links that output back through the model to the training data. When someone remixes IP into derivative works, provenance maintains the connection to original sources so compensation can flow to everyone in the creative chain.
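The history tree can be modeled as a directed graph in which each work lists its parents. The minimal sketch below assumes a simple in-memory registry; `Work`, `ProvenanceGraph`, and their fields are hypothetical names for illustration, not the Origin Protocol API. Walking parent links from any output recovers every upstream work, and therefore every creator who should share in compensation.

```python
from dataclasses import dataclass, field


@dataclass
class Work:
    """A registered piece of IP: its creator, its license, and the works it derives from."""
    work_id: str
    creator: str
    license: str
    parents: list[str] = field(default_factory=list)


class ProvenanceGraph:
    """Hypothetical in-memory provenance registry (illustrative, not a real protocol API)."""

    def __init__(self) -> None:
        self.works: dict[str, Work] = {}

    def register(self, work: Work) -> None:
        # A derivative may only point at parents that are already registered,
        # so every lineage ends at registered original works.
        for parent in work.parents:
            if parent not in self.works:
                raise KeyError(f"unknown parent: {parent}")
        self.works[work.work_id] = work

    def ancestors(self, work_id: str) -> set[str]:
        """Every work reachable by following parent links (iterative DFS)."""
        seen: set[str] = set()
        stack = list(self.works[work_id].parents)
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(self.works[current].parents)
        return seen

    def contributing_creators(self, work_id: str) -> set[str]:
        """Creators of the work itself and of all of its ancestors."""
        ids = self.ancestors(work_id) | {work_id}
        return {self.works[i].creator for i in ids}


graph = ProvenanceGraph()
graph.register(Work("photo", "alice", "CC-BY-4.0"))
graph.register(Work("song", "bob", "commercial"))
graph.register(Work("remix", "carol", "commercial", parents=["photo", "song"]))
print(sorted(graph.ancestors("remix")))              # ['photo', 'song']
print(sorted(graph.contributing_creators("remix")))  # ['alice', 'bob', 'carol']
```

Rejecting unknown parents at registration time keeps the graph closed: no derivative can claim a lineage that the registry cannot trace.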

Verifiability in this system lets a model prove cryptographically that the IP it trained on was used in accordance with its licensing terms.

Provenance and verifiability enable an ecosystem where AI models can only train on properly licensed data.

What This Architecture Enables

Verifiable Training Data Provenance

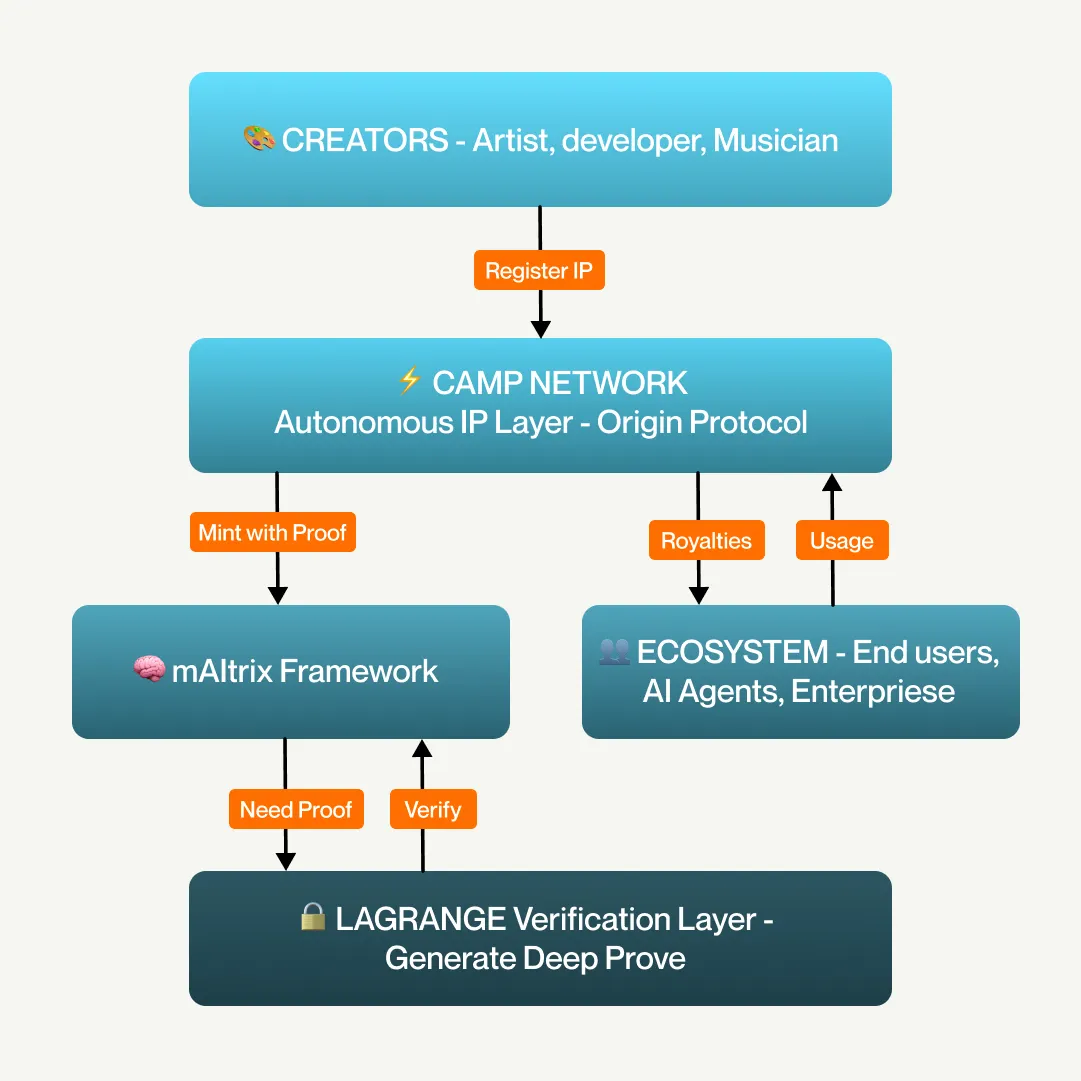

When a model trains on data, every piece of training content is registered on Origin Protocol with immutable provenance. The training process happens in Camp’s mAItrix TEE environment, which ensures the model can only access IP it’s properly licensed to use. Lagrange’s DeepProve generates cryptographic proofs of what data the model actually trained on—not what it claims, but what the mathematics can verify. These proofs are permanent and independently verifiable, posted on Camp Network. Years later, when disputes arise about whether a creator’s work trained a model, the cryptographic proof provides definitive answers.
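DeepProve is Lagrange’s zkML system, and its interface is not reproduced here. As a simpler, swapped-in illustration of how one published value can later settle a dispute, the sketch below uses a Merkle tree commitment: the root of a hash tree over the training items is published, and anyone can later check with a short inclusion proof that a specific work belongs to the committed set. This proves only membership in the committed dataset, not that training actually consumed it. The function names are hypothetical, and the 0x00/0x01 leaf/node prefixes follow the RFC 6962 convention as an assumption of the sketch.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(item: bytes) -> bytes:
    return _h(b"\x00" + item)  # 0x00 prefix marks a leaf (domain separation)


def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)  # 0x01 prefix marks an internal node


def build_levels(items: list[bytes]) -> list[list[bytes]]:
    """All tree levels, leaves first and root last."""
    if not items:
        raise ValueError("cannot commit to an empty dataset")
    level = [leaf_hash(item) for item in items]
    levels = [level]
    while len(level) > 1:
        nxt = [node_hash(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # an odd node out is promoted unchanged
        level = nxt
        levels.append(level)
    return levels


def merkle_root(items: list[bytes]) -> bytes:
    return build_levels(items)[-1][0]


def inclusion_proof(items: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says whether the sibling sits on the right."""
    proof = []
    for level in build_levels(items)[:-1]:
        if index % 2 == 1:
            proof.append((level[index - 1], False))
        elif index + 1 < len(level):
            proof.append((level[index + 1], True))
        # otherwise this node was promoted, so there is nothing to hash at this level
        index //= 2
    return proof


def verify_inclusion(item: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    current = leaf_hash(item)
    for sibling, sibling_on_right in proof:
        current = node_hash(current, sibling) if sibling_on_right else node_hash(sibling, current)
    return current == root


dataset = [b"alice/photo.png", b"bob/song.wav", b"carol/essay.txt"]
root = merkle_root(dataset)  # the single value that would be published onchain
proof = inclusion_proof(dataset, 1)
print(verify_inclusion(b"bob/song.wav", proof, root))   # True
print(verify_inclusion(b"dave/poem.txt", proof, root))  # False
```

The proof grows with the logarithm of the dataset size, so a creator can check membership years later without anyone republishing the full training set.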

Proof of License Compliance

Every time a mAItrix agent uses licensed IP—whether for training, inference, or derivative creation—the transaction generates verifiable records using x402. Smart contracts check licensing terms automatically. If an agent tries to use IP without proper licensing, the transaction fails. If an agent creates derivative works, the provenance chain extends automatically with the original creators specified as parents. Lagrange’s verification ensures these checks can’t be circumvented—the proofs confirm license compliance cryptographically, not just procedurally.
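The check-then-record flow can be sketched as an in-memory registry that rejects any use lacking a matching license grant, the way a reverted transaction would. Everything here is hypothetical: `LicenseRegistry`, `LicenseTerms`, the use labels, and the fee model are illustrative assumptions, and neither x402 payments nor Lagrange proofs are modeled.

```python
from dataclasses import dataclass, field
from typing import Sequence


class LicenseError(Exception):
    """Raised to reject a use; stands in for a reverted smart-contract call."""


@dataclass(frozen=True)
class LicenseTerms:
    allowed_uses: frozenset[str]  # e.g. {"training", "inference", "derivative"}
    fee: int                      # price per license, in hypothetical smallest units


@dataclass
class LicenseRegistry:
    """Hypothetical in-memory model of onchain license checks (not a real contract API)."""
    terms: dict[str, LicenseTerms] = field(default_factory=dict)
    parents: dict[str, list[str]] = field(default_factory=dict)
    grants: set[tuple[str, str, str]] = field(default_factory=set)  # (agent, ip_id, use)

    def register(self, ip_id: str, terms: LicenseTerms, parents: Sequence[str] = ()) -> None:
        self.terms[ip_id] = terms
        self.parents[ip_id] = list(parents)  # provenance link to the source works

    def purchase(self, agent: str, ip_id: str, use: str, payment: int) -> None:
        terms = self.terms.get(ip_id)
        if terms is None or use not in terms.allowed_uses:
            raise LicenseError(f"{use!r} is not permitted for {ip_id}")
        if payment < terms.fee:
            raise LicenseError(f"payment {payment} is below the fee of {terms.fee}")
        self.grants.add((agent, ip_id, use))

    def check(self, agent: str, ip_id: str, use: str) -> None:
        # Every training, inference, or derivative use is gated on a matching grant.
        if (agent, ip_id, use) not in self.grants:
            raise LicenseError(f"{agent} holds no {use!r} license for {ip_id}")

    def create_derivative(self, agent: str, new_id: str, sources: Sequence[str],
                          terms: LicenseTerms) -> None:
        for src in sources:
            self.check(agent, src, "derivative")  # any unlicensed source rejects the whole call
        self.register(new_id, terms, parents=sources)  # sources recorded as parents


registry = LicenseRegistry()
registry.register("art-1", LicenseTerms(frozenset({"training", "derivative"}), fee=100))
registry.purchase("agent-a", "art-1", "derivative", payment=100)
registry.create_derivative("agent-a", "remix-1", ["art-1"],
                           LicenseTerms(frozenset({"inference"}), fee=50))
print(registry.parents["remix-1"])  # ['art-1']

try:
    registry.check("agent-b", "art-1", "training")  # agent-b never bought a license
except LicenseError as err:
    print("rejected:", err)  # rejected: agent-b holds no 'training' license for art-1
```

Derivative creation checks every source before writing anything, so a failed check leaves no partial provenance entry behind.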

Transparent Derivative Chains

When agents create content based on existing IP, Origin Protocol maintains the full provenance graph. Each derivative work specifies its parents, creating an auditable tree of creative lineage. When someone licenses a derivative, royalties flow automatically to all ancestors in the chain according to their specified percentages. These royalty calculations can become complex for deeply nested derivatives, which is where Lagrange’s ZK Coprocessor comes in: it enables offchain computation with onchain verification. The splits across a 15-level derivation tree can be calculated offchain, proven correct with a ZK proof, and then paid out onchain.
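The offchain half of that workflow, computing the splits, can be sketched as a recursive walk up the derivation graph. The sketch assumes a percentage model the text does not spell out: each work passes a fixed share of its revenue, in basis points, to each parent, and its creator keeps the rest. `distribute` and its arguments are hypothetical, and generating the ZK proof of the calculation with Lagrange’s ZK Coprocessor is not shown.

```python
from collections import defaultdict
from typing import Optional

BPS = 10_000  # basis points: 10_000 == 100%


def distribute(amount: int, work_id: str, creators: dict[str, str],
               parent_shares: dict[str, dict[str, int]],
               payouts: Optional[defaultdict] = None) -> defaultdict:
    """Split `amount` paid for `work_id` across its creator and all of its ancestors.

    parent_shares[w] maps each parent of w to the basis points of w's revenue
    passed up to that parent; whatever is not passed up stays with w's creator.
    """
    if payouts is None:
        payouts = defaultdict(int)
    shares = parent_shares.get(work_id, {})
    if sum(shares.values()) > BPS:
        raise ValueError(f"{work_id} passes on more than 100% of its revenue")
    passed_up = 0
    for parent, bps in shares.items():
        share = amount * bps // BPS  # integer math, as onchain token amounts use
        passed_up += share
        distribute(share, parent, creators, parent_shares, payouts)  # recurse up the tree
    payouts[creators[work_id]] += amount - passed_up  # rounding dust stays with this creator
    return payouts


creators = {"remix": "carol", "photo": "alice", "song": "bob", "sample": "dave"}
parent_shares = {
    "remix": {"photo": 2000, "song": 1000},  # remix passes 20% to photo, 10% to song
    "song": {"sample": 5000},                # song passes 50% of what it earns to sample
}
payouts = distribute(1000, "remix", creators, parent_shares)
print(sorted(payouts.items()))  # [('alice', 200), ('bob', 50), ('carol', 700), ('dave', 50)]
```

Because each creator keeps the remainder after integer division, the payouts always sum exactly to the amount paid. A work reachable through two different parents is paid along both paths, since each path passes on revenue independently.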

Autonomous Agent Commerce with Built-in Provenance

mAItrix agents operate as economic actors with onchain identities and wallets. When an agent creates content, it mints it through Origin Protocol with embedded licensing terms and DeepProve proofs of creation. Other agents can discover this content, verify its provenance cryptographically, license it automatically, and trigger settlement—all without human intervention. The provenance carries through every transaction. The verification happens mathematically. The settlement executes programmatically. It’s agent-to-agent commerce with fairness guaranteed by cryptography rather than hoped for through policy.
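One way an agent could check provenance before licensing is a hash-chained record history, sketched here under stated assumptions: each record stores the SHA-256 hash of the previous one, and the hash of the latest record is assumed to be anchored onchain. The record fields, `append`, and `verify_chain` are hypothetical, and a real deployment would also need signatures identifying each agent, which this sketch omits.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(record: dict) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so every agent hashes identical bytes.
    encoded = json.dumps(record, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(encoded).hexdigest()


def append(chain: list[dict], event: str, **details) -> None:
    """Add a record that commits to the hash of the record before it."""
    prev = record_hash(chain[-1]) if chain else GENESIS
    chain.append({"event": event, "prev": prev, **details})


def verify_chain(chain: list[dict], anchored_head: str) -> bool:
    """Check every back-link and that the newest record matches the anchored head hash."""
    if not chain or chain[0]["prev"] != GENESIS:
        return False
    for earlier, later in zip(chain, chain[1:]):
        if later["prev"] != record_hash(earlier):
            return False
    return record_hash(chain[-1]) == anchored_head


content = b"agent-generated artwork"
chain: list[dict] = []
append(chain, "mint", creator="agent-a", license="commercial",
       content_sha256=hashlib.sha256(content).hexdigest())
head = record_hash(chain[-1])  # assumed to be anchored onchain after minting

# agent-b checks the bytes it received and the record history before licensing
verified = (hashlib.sha256(content).hexdigest() == chain[0]["content_sha256"]
            and verify_chain(chain, head))
print(verified)  # True

append(chain, "license", licensee="agent-b", fee=50)
append(chain, "settle", payer="agent-b", payee="agent-a", amount=50)
head = record_hash(chain[-1])     # new anchored head after settlement
chain[1]["fee"] = 0               # tamper with the license record
print(verify_chain(chain, head))  # False
```

Any edit to an earlier record breaks the link stored in the next one, so, given the anchored head, a buying agent can detect tampering without trusting the seller’s copy of the history.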

Camp × Lagrange: Building the Foundation Together

Camp Network and Lagrange.dev are building verifiable, provenance-aware AI infrastructure. Our shared vision is that Camp’s IP infrastructure becomes the default for registering and licensing content for AI training, while Lagrange’s verification layer becomes the default for proving agents respect those licenses.

In practice, this means whenever someone builds an autonomous agent—whether for content creation, capital management, or service provision—the infrastructure for IP compliance and verification is readily available:

- Origin Protocol handles IP registration with immutable provenance and programmable licensing

- mAItrix provides agent deployment with built-in access controls ensuring only licensed IP can be used

- DeepProve generates cryptographic proofs of what models agents used and what data they accessed

- ZK Prover Network ensures those proofs are generated in a decentralized, censorship-resistant manner

- Smart contracts enforce licensing terms and distribute compensation automatically

Consider someone who builds such an agent. It trains in a mAItrix TEE environment that ensures it can access only licensed content. DeepProve generates a proof of what data the training actually used. The agent deploys and begins creating derivative works, each minted with provenance linking back to the training data. When others license these derivatives, royalties flow automatically to both the agent operator and the original creators. Every step is verified cryptographically. Every transaction is transparent and auditable. Every participant is compensated according to programmable terms.

The Result

The result is infrastructure where:

- Developers can build agents without worrying about IP liability because compliance is cryptographic

- Creators can monetize their work without platform extraction because settlement is automatic

- Users can trust agent outputs without blind faith because behavior is verifiable

- Regulators can audit training data without compromising privacy because zero-knowledge proofs answer their questions mathematically